As most of you already know, I'm always trying to push the limits of Cameras to make them better, faster, and easier to use with VisionCamera.

For those of you who don't know, VisionCamera is a React Native library that lets you use the native platform Camera through simple JS-based APIs, while still providing full control over the hardware sensors and performance that matches a fully native app.

iOS

In this blog-post I want to focus on Android, but just for reference I will quickly explain the architecture on the native iOS side.

On iOS, all Audio/Video APIs (including the Camera) are exposed through the AVFoundation API. I use Swift for all the implementation, and I can easily go through Objective-C++ to work with C++ APIs.

- I create an `AVCaptureDevice.DiscoverySession` to find an `AVCaptureDevice` (e.g. the back wide-angle camera)

- I create an `AVCaptureVideoPreviewLayer` that renders the Camera to the screen

- I create an `AVCaptureSession`, add the input (the `AVCaptureDevice`, wrapped in an `AVCaptureDeviceInput`) and connect the `AVCaptureVideoPreviewLayer` to it

- When I call `startRunning()` on the session, it starts streaming Frames from the Camera

This works as expected, is simple to work with and provides full flexibility for all Camera features.

In other words, every app can rebuild the stock iOS Camera app using AVFoundation.

It even supports multi-zoom out of the box!

Android

On Android, things get a little bit more tricky.

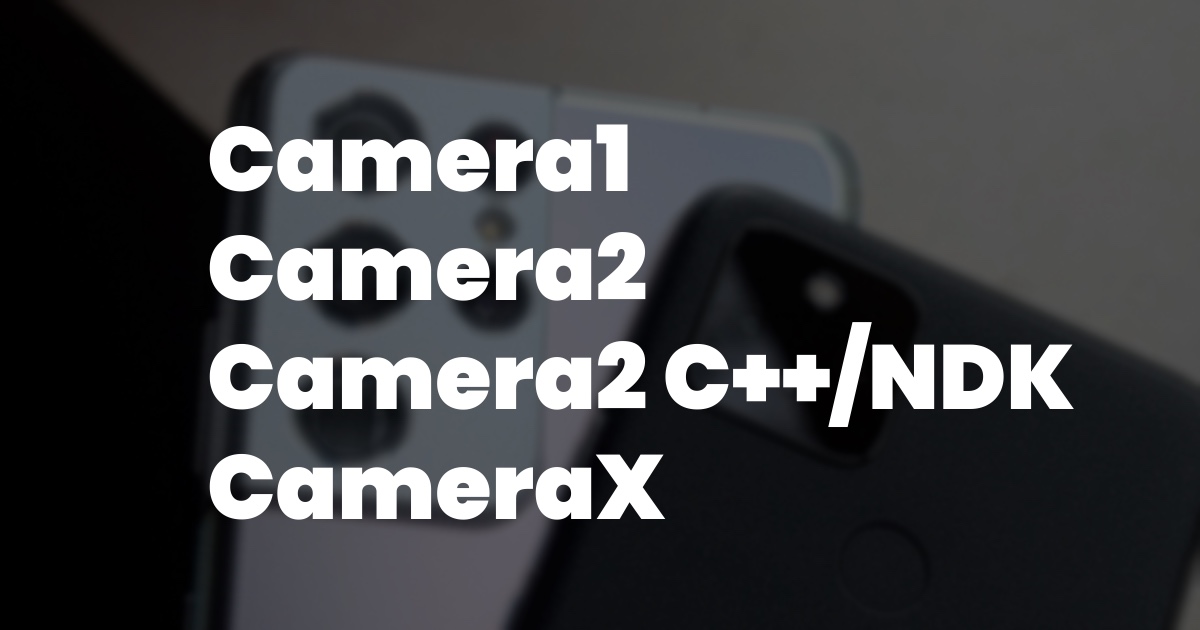

You have three options:

- Camera1

- Camera2

- CameraX

Let's go through them one by one:

Camera1

This was the default Camera API since Android 1 and was deprecated in API 21. (At the time of writing this blog-post we're already at API 33, so it's a really old API.)

I haven't really used Camera1, but from its documentation (and other people's opinions) it looks very simple: the API is easy to understand and you can get started quite quickly.

However, since Android runs on so many different devices, it was hard to support advanced features and extend the API without introducing breaking changes.

For example, Camera1 did not support multi-cams since phones back then only had one camera at the back, and maybe one camera at the front. Some phones today even have 5 different cameras at the back!


Camera1 was simple, but not developer-friendly. To configure a Camera session, you would pass a set of Camera.Parameters, but if any of those parameters was invalid, the Camera just crashed with the message "setParameters failed". It was nearly impossible to find out which Parameters actually work without knowing the hardware you build for.

You needed to think about tons of edge-cases, figure out workarounds for different devices, and more.

Also, lots of the API documentation was just plain wrong and unreliable - for example, there is a function that returns a list of supported photo sizes:

```java
/**
 * Gets the supported picture sizes.
 *
 * @return a list of supported picture sizes.
 * This method will always return a list with at least one element.
 */
public List<Size> getSupportedPictureSizes()
```

Funnily enough, "This method will always return a list with at least one element" is simply not true: this method returns null on a Samsung S3 Mini. Huh.
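Since even a documented "always non-empty" return value can be null, every query like this needs a defensive check. Below is a minimal sketch of that pattern, written in C++ rather than Java; `Size` and `pickLargestPictureSize` are hypothetical names, and a null pointer stands in for Java's `null`:

```cpp
#include <cassert>
#include <optional>
#include <vector>

// Hypothetical stand-in for android.hardware.Camera.Size (width/height in pixels).
struct Size {
    int width;
    int height;
};

// Picks the largest picture size by pixel count. `sizes` models the return value of
// getSupportedPictureSizes(): it may be null (as on the Samsung S3 Mini) or, to be safe,
// empty. Instead of crashing, the caller gets std::nullopt and can keep the default size.
std::optional<Size> pickLargestPictureSize(const std::vector<Size>* sizes) {
    if (sizes == nullptr || sizes->empty()) {
        return std::nullopt;
    }
    Size best = sizes->front();
    for (const Size& s : *sizes) {
        // Compare in 64-bit to avoid overflow on very large sensors.
        if (static_cast<long long>(s.width) * s.height >
            static_cast<long long>(best.width) * best.height) {
            best = s;
        }
    }
    return best;
}
```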

Additionally, tap to focus was weird to implement since it always used a custom coordinate system mapped to [-1000, 1000], instead of just the Camera size or 0-1. Why? No one knows.
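To make that coordinate system concrete, here is a minimal C++ sketch that turns a tap in view pixels into a square focus rectangle in Camera1's [-1000, 1000] space, where (-1000, -1000) is the top-left of the camera's field of view. `FocusRect`, `tapToFocusRect` and the default `halfSize` of 100 are hypothetical, and the sketch assumes the view shows the sensor image without any rotation or mirroring, which real devices rarely do:

```cpp
#include <algorithm>
#include <cassert>

// A rectangle in Camera1's focus-area space: (-1000, -1000) is the top-left and
// (1000, 1000) the bottom-right of the camera's field of view.
struct FocusRect {
    int left, top, right, bottom;
};

// Maps a value in [0, extent] (view pixels) to [-1000, 1000].
static int toCameraSpace(float value, float extent) {
    return static_cast<int>(value / extent * 2000.0f - 1000.0f);
}

// Builds a square focus area of 2 * halfSize camera units around a tap at (tapX, tapY).
// Assumption: the view is not rotated or mirrored relative to the sensor; a real
// implementation must undo the display rotation (and front-camera mirroring) first.
FocusRect tapToFocusRect(float tapX, float tapY, float viewWidth, float viewHeight,
                         int halfSize = 100) {
    int cx = toCameraSpace(tapX, viewWidth);
    int cy = toCameraSpace(tapY, viewHeight);
    // Keep the whole rectangle inside [-1000, 1000]; anything outside is invalid.
    cx = std::clamp(cx, -1000 + halfSize, 1000 - halfSize);
    cy = std::clamp(cy, -1000 + halfSize, 1000 - halfSize);
    return {cx - halfSize, cy - halfSize, cx + halfSize, cy + halfSize};
}
```

A tap in the center of a 1080x1920 view yields (-100, -100, 100, 100); a tap in a corner gets clamped so the rectangle stays inside the valid range.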

Overall, the API was simple (and weird), but it didn't have many features and is deprecated now anyways.

Camera2

To catch up with Apple's Camera, Android API 21 introduced a new Camera API: Camera2! It is based on states and callbacks to give developers more control over the Camera hardware. Coming from Apple development, I'm not a big fan of Google's APIs, and Camera2 is no exception: it's weird and extremely verbose.

A simple look at this table is enough to understand the complexity of Camera2: CaptureResult.CONTROL_AE_STATE table (auto-exposure alone has six states and 18 documented state transitions).
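Here is a rough idea of what handling that state machine looks like in practice: a minimal C++ sketch of the "wait for auto-exposure before taking a photo" step, loosely modeled on the precapture flow in Google's Camera2Basic sample. The enum and function names are hypothetical, and the AE state is modeled as an optional because the sample treats a missing value as "done" instead of waiting forever:

```cpp
#include <cassert>
#include <optional>

// The six values CaptureResult.CONTROL_AE_STATE can report (names mirror the Android
// constants; the numeric values of this enum are not meant to match them).
enum class AeState { Inactive, Searching, Converged, Locked, FlashRequired, Precapture };

// App-side phases while waiting for auto-exposure before a still capture.
enum class Phase { WaitingPrecapture, WaitingNonPrecapture, ReadyToCapture };

// Advances the phase for one capture result; `ae` is empty if the result had no AE state.
Phase onCaptureResult(Phase phase, std::optional<AeState> ae) {
    switch (phase) {
        case Phase::WaitingPrecapture:
            // The precapture metering sequence has started (or we cannot tell): wait for it to end.
            if (!ae || *ae == AeState::Precapture || *ae == AeState::FlashRequired) {
                return Phase::WaitingNonPrecapture;
            }
            return phase;
        case Phase::WaitingNonPrecapture:
            // Any state other than PRECAPTURE means metering is finished.
            if (!ae || *ae != AeState::Precapture) {
                return Phase::ReadyToCapture;
            }
            return phase;
        case Phase::ReadyToCapture:
            return phase;
    }
    return phase;
}
```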

To put this on a timeline, Camera2 arrived around the time the Samsung Galaxy S5 was released, while Apple was already light-years ahead with the iPhone 6.

There are hidden surprises throughout the entire Camera2 API, and they already start when showing the Camera Preview, since the Frames are rotated by 90 degrees. To get that "fixed", you'll have to apply a Matrix rotation transformation. Why does the Preview not rotate the Frames to the app's orientation by default? No one knows.
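For a sense of what that "fix" involves, here is a minimal C++ sketch of the math. `previewRotationDegrees` follows the formula given in Android's `Camera.setDisplayOrientation` documentation (front cameras additionally compensate for mirroring), and `rotateAround` applies a clockwise rotation about the view's center, similar to what `Matrix.postRotate(degrees, px, py)` does on screen. Both function names are hypothetical:

```cpp
#include <cassert>
#include <cmath>

enum class Facing { Back, Front };

// Clockwise degrees the preview must be rotated to appear upright.
// sensorOrientation and displayRotation are in degrees (0, 90, 180 or 270).
int previewRotationDegrees(int sensorOrientation, int displayRotation, Facing facing) {
    if (facing == Facing::Front) {
        int result = (sensorOrientation + displayRotation) % 360;
        return (360 - result) % 360;  // compensate for the front camera's mirroring
    }
    return (sensorOrientation - displayRotation + 360) % 360;
}

struct Point {
    float x, y;
};

// Rotates p clockwise (in y-down screen coordinates) by `degrees` around (cx, cy).
Point rotateAround(Point p, double degrees, double cx, double cy) {
    constexpr double kPi = 3.14159265358979323846;
    const double rad = degrees * kPi / 180.0;
    const double dx = p.x - cx;
    const double dy = p.y - cy;
    return {static_cast<float>(cx + dx * std::cos(rad) - dy * std::sin(rad)),
            static_cast<float>(cy + dx * std::sin(rad) + dy * std::cos(rad))};
}
```

For a typical back camera with a sensor orientation of 90 on a phone held upright (display rotation 0), the preview needs a 90-degree rotation.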

Taking a picture is actually fairly simple, but since Android runs on many different devices, some features you find in your stock Camera app simply aren't accessible. For example, Samsung phones have Bokeh, HDR, and Night modes that offer some live- and post-processing for better quality photos. It was simply not possible to use those in your own apps; you'd have to re-implement the whole thing (and since those are implemented at the HAL/C++ level, this was pretty much impossible). Camera2 now has an Extensions API (added in API 31) which most phones implement, but again, not all of them.

Recording videos is a bit more tricky and it's quite hard to run realtime frame processing, e.g. to implement your own MediaRecorder, analyse frames, apply color filters and more.

I believe most of those difficulties are caused by the Java part - data comes from the hardware (HAL/C++), and has to be wrapped using JNI to be used in Java. Using C++ here would grant you full control over realtime frame processing and hardware control, but hey, who wants to write apps with C++?

Overall, Camera2 is a somewhat powerful but insanely hard to use API. That's why there are so many wrappers around Camera2:

- https://github.com/natario1/CameraView

- https://github.com/CameraKit/camerakit-android

- https://github.com/RedApparat/Fotoapparat

CameraKit even implements the Preview in C++: CameraSurfaceView.cpp

Camera2 C++/NDK

Since Android API 24, Camera2 can also be accessed from the NDK (native development kit, aka "the C++ side on Android"). For example, the callback that fires when a capture starts is a plain C function pointer:

```c
typedef void (*ACameraCaptureSession_captureCallback_start)(
        void* context,
        ACameraCaptureSession* session,
        const ACaptureRequest* request,
        int64_t timestamp);
```

Since VisionCamera is a library for React Native (which uses C++ to expose functionality to JS), writing it directly in C++ skips the Java step entirely:

- Java React Native Module: JS -> C++ -> Java -> C++ -> JS

- C++ React Native Module: JS -> C++ -> JS

This comes with a performance benefit, as we don't have to go through JNI (the Java/C++ interface) anymore.
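To illustrate what skipping JNI looks like, here is a minimal C++ sketch of a handler matching the `ACameraCaptureSession_captureCallback_start` signature. The NDK passes back the `context` pointer you registered, so it can be cast straight to a C++ object. `FrameTimestampTracker` and `onCaptureStartedTrampoline` are hypothetical, and the two `struct` forward declarations are stand-ins so the sketch compiles without the NDK headers:

```cpp
#include <cassert>
#include <cstdint>

// Stand-ins so this compiles without the NDK. In a real build these opaque types come
// from <camera/NdkCameraCaptureSession.h> and must not be redeclared.
struct ACameraCaptureSession;
struct ACaptureRequest;

// Hypothetical C++ object that wants to know when each capture starts.
class FrameTimestampTracker {
public:
    void onCaptureStarted(int64_t timestamp) {
        lastTimestamp_ = timestamp;
        ++count_;
    }
    int64_t lastTimestamp() const { return lastTimestamp_; }
    int count() const { return count_; }

private:
    int64_t lastTimestamp_ = 0;
    int count_ = 0;
};

// Same signature as ACameraCaptureSession_captureCallback_start. `context` is whatever
// pointer was registered with the callbacks, so we cast it back to our object directly.
static void onCaptureStartedTrampoline(void* context, ACameraCaptureSession* /*session*/,
                                       const ACaptureRequest* /*request*/, int64_t timestamp) {
    static_cast<FrameTimestampTracker*>(context)->onCaptureStarted(timestamp);
}
```

In a real build you would include `<camera/NdkCameraCaptureSession.h>` instead of the stand-ins, store the trampoline in the `onCaptureStarted` field of an `ACameraCaptureSession_captureCallbacks` struct with `context` pointing at the tracker, and pass that struct to `ACameraCaptureSession_setRepeatingRequest`.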

Also, implementing the Preview in C++ gives you full control over frame processing, e.g. for rendering Skia filters, symbols and shaders, drawing with OpenGL and more.
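As a toy example of that kind of direct frame access, here is a CPU-side grayscale filter in C++. It assumes the frame has already been converted to RGBA8888 (Android camera frames usually arrive as YUV) and that you know its row stride; a real-time filter would more likely run on the GPU via Skia or OpenGL. `grayscaleInPlace` is a hypothetical name:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Converts an RGBA8888 frame to grayscale in place. `rowStride` is the number of bytes
// per row, which can be larger than width * 4 because of padding; padding is not touched.
// Uses an integer approximation of the BT.601 luma weights: (77R + 150G + 29B) >> 8.
void grayscaleInPlace(uint8_t* pixels, int width, int height, int rowStride) {
    for (int y = 0; y < height; ++y) {
        uint8_t* row = pixels + static_cast<size_t>(y) * rowStride;
        for (int x = 0; x < width; ++x) {
            uint8_t* px = row + x * 4;
            const uint8_t luma =
                static_cast<uint8_t>((77 * px[0] + 150 * px[1] + 29 * px[2]) >> 8);
            px[0] = px[1] = px[2] = luma;  // alpha (px[3]) is left untouched
        }
    }
}
```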

Camera2 C++/NDK sounds like a good fit for VisionCamera, but the Camera2 Java layer contains many workarounds, device-specific fixes and helper functions that I would have to rebuild from scratch if I were to use Camera2 C++ for VisionCamera. To name a few:

- The Extensions API (Vendor Bokeh-, HDR- and Night-modes) does not work since it is implemented in Camera2 Java (no HDR)

- LEGACY cameras won't work. LEGACY cameras are Camera1 devices that are supported through a Camera2 wrapper. There are no official numbers on how many phones are affected by this, but I'd assume ~5-10% of Android phones still have LEGACY cameras.

- The Preview View is written in Java and has to be re-implemented using OpenGL.

CameraX

After developers complained about the complexity of Camera2, Google built CameraX as part of the Android Jetpack libraries.

CameraX's goal is to simplify Camera2's API, while still providing full control for advanced use-cases.

Its API uses a somewhat-declarative "use-case" design, meaning you tell CameraX what you want to achieve, and it internally tries to find the perfect format, device and output combinations. There are 4 different use-cases out of the box:

- Preview: View an image on the display.

- Image capture: Save images.

- Video capture: Save video and audio.

- Image analysis: Access a buffer seamlessly for use in your algorithms, such as to pass to ML Kit.

You'll be surprised once again: not every device can attach every use-case at the same time.

Almost no Android phones support all 4 use-cases at the same time, so you have to make some compromises. For example, you could attach only:

- Preview

- Video capture

- Image analysis

...and then, when taking a photo, you just take a screenshot of the Preview view. This is also much faster than taking an actual photo, which on Android is nowhere near as quick as on iOS and can even take multiple seconds to complete.

Fun Fact: This is how Snapchat works on many Android devices.

And then there are some phones that don't even support 3 use-cases at the same time. So you'd have to make yet another compromise:

- Video capture

- Image analysis

And then draw frames to the Preview view in the Image analysis callback.
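The fallback logic boils down to trying use-case combinations in priority order. Here is a minimal C++ sketch of that idea; `UseCase`, `pickCombination` and the `isSupported` probe are hypothetical, since with CameraX you would find out, for example, by attempting to bind the use-cases and handling the failure:

```cpp
#include <cassert>
#include <functional>
#include <optional>
#include <vector>

enum class UseCase { Preview, ImageCapture, VideoCapture, ImageAnalysis };
using Combination = std::vector<UseCase>;

// Returns the first combination (in priority order) that the device accepts,
// or std::nullopt if none of them works.
std::optional<Combination> pickCombination(
    const std::function<bool(const Combination&)>& isSupported) {
    const std::vector<Combination> candidates = {
        // Ideal: all four use-cases at once.
        {UseCase::Preview, UseCase::ImageCapture, UseCase::VideoCapture, UseCase::ImageAnalysis},
        // Compromise 1: photos become screenshots of the Preview.
        {UseCase::Preview, UseCase::VideoCapture, UseCase::ImageAnalysis},
        // Compromise 2: the Preview is drawn from the Image analysis callback.
        {UseCase::VideoCapture, UseCase::ImageAnalysis},
    };
    for (const Combination& c : candidates) {
        if (isSupported(c)) {
            return c;
        }
    }
    return std::nullopt;
}
```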

I call them compromises and not workarounds, since there are obvious drawbacks with those approaches. Taking a screenshot instead of a photo has much lower quality and doesn't support any extensions (Bokeh, HDR, Portrait, Night, ...).

Drawing to the Preview in the Image analysis callback also comes with a drawback, since you have to do both things within one Frame; otherwise a Frame will be dropped and the Preview lags. Also, Image analysis is now forced to the same resolution as the Preview, or you will have to introduce an arbitrary frame resize.
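The "one Frame" constraint is easy to quantify. Here is a minimal C++ sketch, assuming analysis and drawing run sequentially in the same callback and ignoring any buffering: the per-frame budget is 1000 / FPS milliseconds, and if the combined work takes longer, at most 1000 / workMs frames per second can reach the Preview. All names are hypothetical:

```cpp
#include <cassert>
#include <cmath>

// Time available per frame in milliseconds (about 33.3 ms at 30 FPS).
double frameBudgetMs(double cameraFps) {
    return 1000.0 / cameraFps;
}

// Upper bound on the frames per second that can reach the Preview when each frame
// has to be analysed *and* drawn in the same callback, taking workMs in total.
double maxPreviewFps(double cameraFps, double workMs) {
    if (workMs <= frameBudgetMs(cameraFps)) {
        return cameraFps;  // the work fits into the budget, no frames need to be dropped
    }
    return 1000.0 / workMs;  // workMs > budget > 0 here, so this is below cameraFps
}

// Frames per second that the camera delivers but that never make it to the Preview.
double droppedFramesPerSecond(double cameraFps, double workMs) {
    return cameraFps - maxPreviewFps(cameraFps, workMs);
}
```

At 30 FPS the budget is about 33.3 ms; if analysis plus drawing takes 40 ms, at most 25 frames per second reach the Preview and at least 5 are dropped every second.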

Even though CameraX doesn't feel production ready at all, Google recommends using it for new apps.

Lots of functionality is still missing, such as multi-cam support, switching from front to back camera while recording, etc.

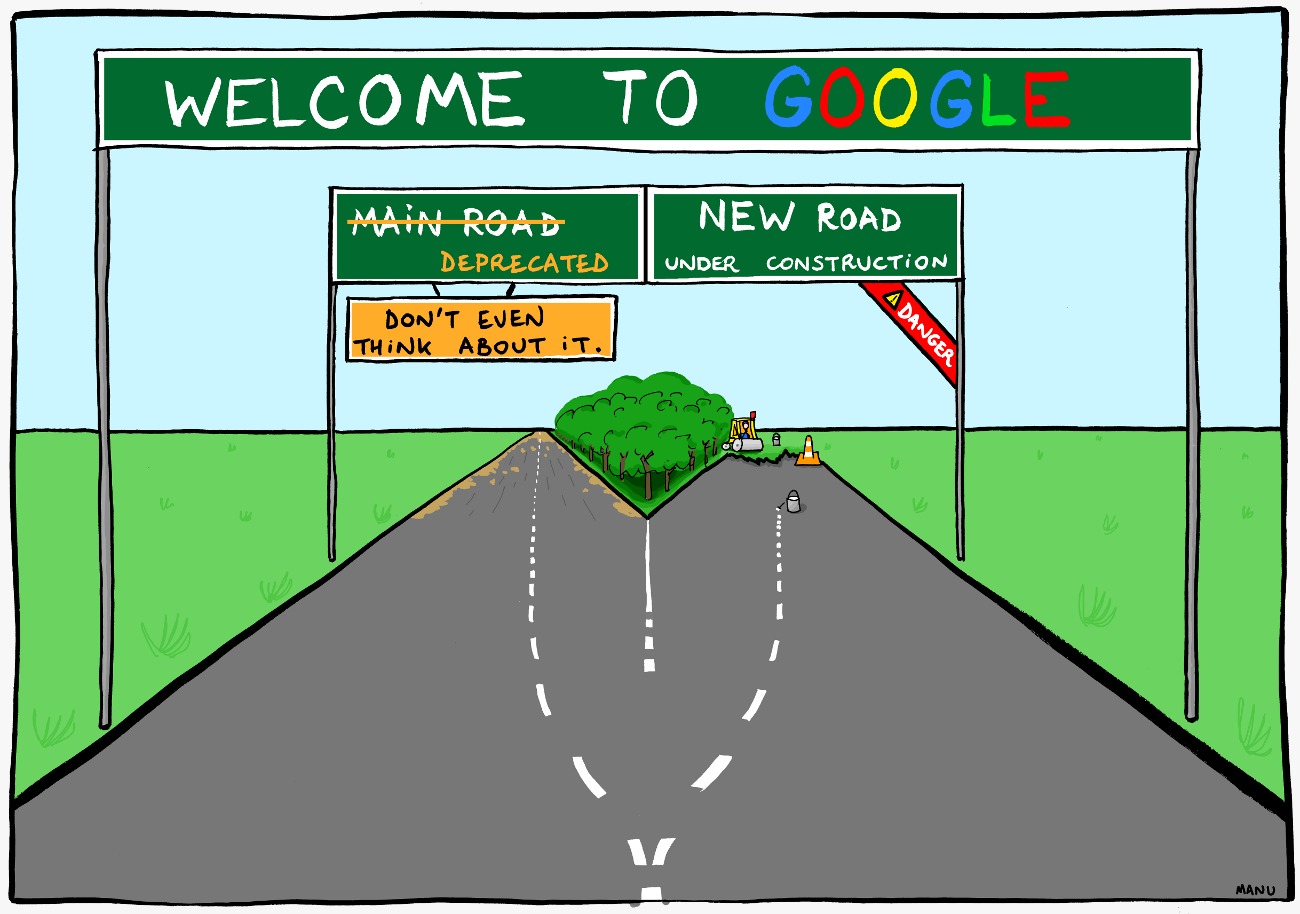

After talking to a friend (ex-googler) about this, he sent me this meme:

In my opinion, this sums up the entire Camera situation on Android pretty well.

For VisionCamera

I decided to go with CameraX for the VisionCamera Android implementation, in hopes of simplifying all of this.

It turned out that I shot myself in the foot with this: I now have a huge // TODO list in the Android codebase and a ton of workarounds for issues that are just waiting to be fixed upstream in CameraX. At the current development speed, I expect this to take months or years.

VisionCamera works great on iOS, and as good as it can on Android.

In the VisionCamera V3 ✨ efforts I have been thinking about a full rewrite from CameraX to Camera2 C++/NDK, but this is extremely tricky and would probably introduce a ton of breaking changes on some Android devices.

On Android it always comes down to one question: do you want to be innovative with fancy new features, or do you want your app to run on old phones?

Conclusion

- Camera APIs on iOS are powerful and fast, but a bit tricky to work with.

- Camera APIs on Android are not as powerful and terrible to work with.

If you appreciate all the work I did with VisionCamera to abstract those painful APIs into a nice and simple JS API, leave a star on the repo ⭐️ and consider sponsoring me on GitHub ❤️ so I can continue to improve this in my free time.